Paper

http://proceedings.mlr.press/v130/ashutosh21a/ashutosh21a.pdf

Please cite as:

@misc{ashutosh2020bandit,

title={Bandit algorithms: Letting go of logarithmic regret for statistical robustness},

author={Kumar Ashutosh and Jayakrishnan Nair and Anmol Kagrecha and Krishna Jagannathan},

year={2020},

eprint={2006.12038},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

Motivation

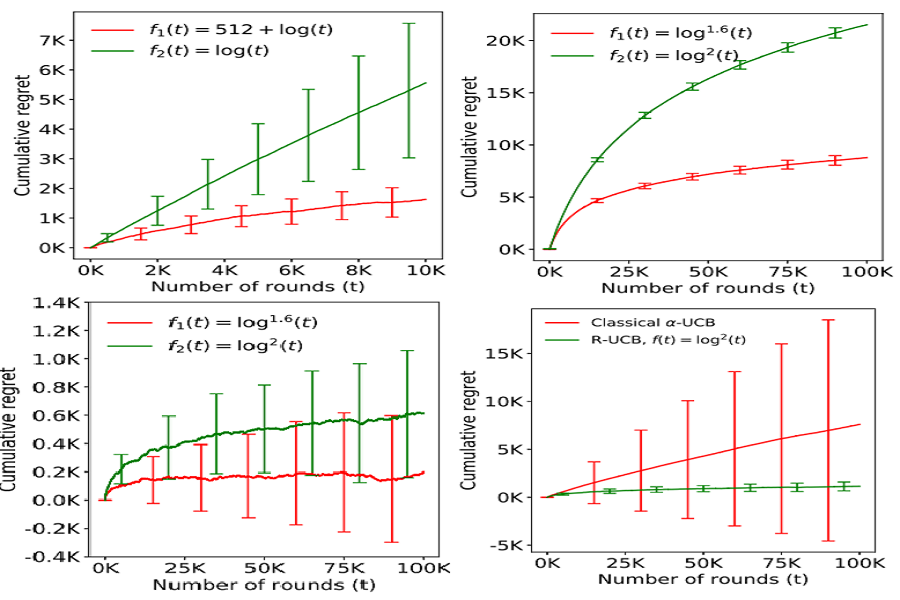

Classical Multi-armed Bandit Algorithms are designed for some fixed distribution of reward functions. In fact, in most of the algorithms, the parameters of the reward distributions are indeed ‘baked’ into the algorithm. We study the problem of MAB problems when the reward distribution is not known, or the parameters are not known a-priori. These issues are quite natural, since the parameter estimation is itself prone to noise and outliers. Our proposed algorithms achieve a regret slightly worse than logarithmic while being statistically robust.

To know more, please refer to the paper: http://proceedings.mlr.press/v130/ashutosh21a/ashutosh21a.pdf